Automated Form Assistant with LLMs

This project automated the form-filling process with a document-driven AI pipeline that combines OCR, Retrieval-Augmented Generation with LLMs, and a chatbot interface to extract and validate user data and fill it into the target form efficiently.

Project Overview

This project focused on automating the completion of the I-130 immigration form, a key step in the U.S. family-based green card application process. The solution was built as an end-to-end AI pipeline that uses OCR to extract text from uploaded documents, a Retrieval-Augmented Generation (RAG) approach with large language models to interpret and structure the data, and a chatbot interface to interactively gather any missing information. The extracted and validated data is formatted into a structured JSON file, which is then used to populate the I-130 form through a Python-based PDF filler. The entire process is integrated into a user-friendly interface, allowing individuals to upload documents, follow progress, and preview the completed form. This project demonstrated how LLMs and intelligent data extraction can streamline complex legal workflows and improve accessibility.

Literature Review

Automated form-filling agents are increasingly adopted in modern workflows because they streamline data entry and reduce manual errors, especially in settings with frequent document handling, such as government and business operations. As Hegde et al. (2023) note, these systems also improve accessibility for people who struggle to complete forms or use digital platforms. Recent research has advanced intelligent form-filling technology considerably. For instance, Belgacem et al. (2023) showed that a machine learning model trained on clean, structured data can accurately autofill categorical fields, reducing errors and making pipelines more efficient. Similarly, Liu et al. (2024) improved retrieval-based AI systems by strengthening their understanding of document layout and content, enabling better navigation of complex forms.

Despite these advances, challenges persist, particularly regarding the structure and quality of input forms. Biswas and Talukdar (2024) highlighted that document alignment plays a critical role in extraction accuracy, which underlines the importance of robust preprocessing. Beyond data extraction, researchers such as Hansen et al. (2019) have explored how AI can interpret form structure by recognizing elements like headers, tables, and fields, further supporting automation. Many modern systems rely on OCR to extract text from scanned forms, but its reliability depends on document characteristics such as font and image clarity, as observed by Mittal and Garg (2020) and Patel et al. (2012). These findings continue to shape the design of more accurate and adaptable form automation systems.

Methodology

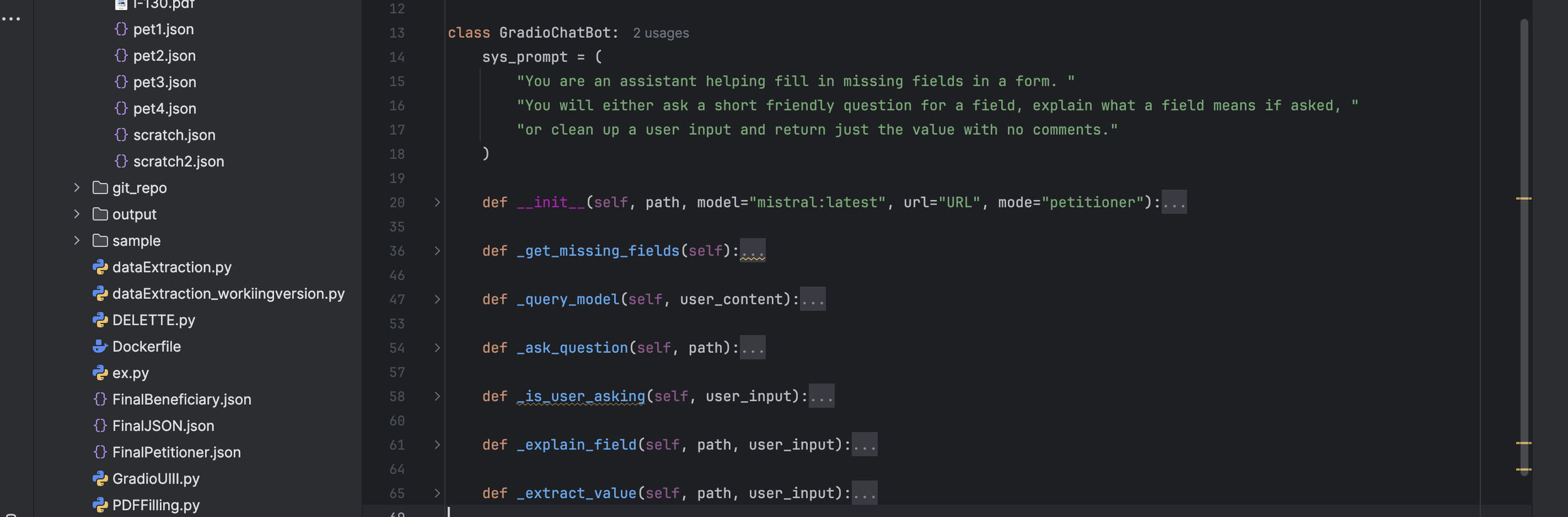

This project consists of two primary components: extracting data and filling out the PDF form. As illustrated in Figure 1, data extraction is broken down into three stages: document preprocessing, extraction with a Retrieval-Augmented Generation (RAG) pipeline, and refinement of the extracted information through JSON correction. Once the necessary data has been gathered and structured, it is used to populate the I-130 immigration form.
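The stage structure shown in Figure 1 can be sketched as a small orchestration function that runs the three extraction stages once per party. This is a minimal sketch under assumptions: the stage callables and their signatures (`preprocess`, `extract`, `correct`) are hypothetical names rather than the project's actual code, and in practice `extract` would wrap the Haystack RAG pipeline.

```python
from typing import Callable, Dict, List

# Hypothetical stage signatures (illustrative assumptions, not the project's code):
#   preprocess(paths)      -> list of text chunks (document preprocessing / OCR)
#   extract(party, chunks) -> raw JSON text produced by the LLM (RAG stage)
#   correct(raw)           -> validated dict (JSON correction stage)
Preprocess = Callable[[List[str]], List[str]]
Extract = Callable[[str, List[str]], str]
Correct = Callable[[str], dict]


def run_extraction(
    uploads: Dict[str, List[str]],
    preprocess: Preprocess,
    extract: Extract,
    correct: Correct,
) -> Dict[str, dict]:
    """Run preprocessing, RAG extraction, and JSON correction once per party."""
    results: Dict[str, dict] = {}
    for party, paths in uploads.items():  # e.g. "petitioner", "beneficiary"
        chunks = preprocess(paths)
        raw = extract(party, chunks)
        results[party] = correct(raw)
    return results
```

Passing the stages in as callables keeps each one independently testable with stubs, and the per-party loop mirrors the separate petitioner and beneficiary batches.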

Data Extraction

Data extraction relies mainly on Haystack 2.0, which is used to build the RAG pipeline, and on models served through Ollama. The documents (passports, certificates, and other forms) are processed in two batches: one for the petitioner and one for the beneficiary. When the user uploads documents through the REST API, the text extraction method depends on the document type. If a PDF contains only text, PDFReader extracts it directly. If a PDF contains images, PDF2Image first converts its pages to images and Tesseract OCR is then applied. If the document is an image, Tesseract OCR is applied directly. The extracted text is stored in InMemoryDocumentStore, Haystack's simple in-memory document store.
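The type-based routing can be sketched as follows. This sketch assumes pypdf's `PdfReader` for text PDFs and `pdf2image.convert_from_path` plus `pytesseract.image_to_string` for OCR (pdf2image needs Poppler installed, and pytesseract needs the Tesseract binary). The rule that a PDF with no extractable text is treated as scanned is a hypothetical heuristic, and the helper names are illustrative. The extractors are injectable so the routing logic can be tested without those libraries.

```python
from pathlib import Path
from typing import Callable

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".tif", ".tiff", ".bmp"}


def _read_pdf_text(path: str) -> str:
    """Read the embedded text layer of a PDF with pypdf."""
    from pypdf import PdfReader  # lazy import: only needed when actually reading PDFs

    reader = PdfReader(path)
    return "\n".join(page.extract_text() or "" for page in reader.pages)


def _ocr_pdf(path: str) -> str:
    """Rasterize each PDF page with pdf2image, then OCR the page images with Tesseract."""
    import pytesseract
    from pdf2image import convert_from_path

    return "\n".join(pytesseract.image_to_string(img) for img in convert_from_path(path))


def _ocr_image(path: str) -> str:
    """OCR an image file directly with Tesseract."""
    import pytesseract
    from PIL import Image

    with Image.open(path) as img:
        return pytesseract.image_to_string(img)


def extract_text(
    path: str,
    read_pdf_text: Callable[[str], str] = _read_pdf_text,
    ocr_pdf: Callable[[str], str] = _ocr_pdf,
    ocr_image: Callable[[str], str] = _ocr_image,
) -> str:
    """Choose an extraction method based on the file type."""
    suffix = Path(path).suffix.lower()
    if suffix == ".pdf":
        text = read_pdf_text(path)
        # Assumption: a PDF with no extractable text is a scanned (image-only) PDF.
        return text if text.strip() else ocr_pdf(path)
    if suffix in IMAGE_SUFFIXES:
        return ocr_image(path)
    raise ValueError(f"unsupported document type: {path}")
```

This sketch decides per file for simplicity; a per-page fallback (OCR only the pages that have no text layer) would handle mixed PDFs better.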

Before the RAG pipeline runs, pre-formatted prompts are prepared for the petitioner and the beneficiary to keep the JSON output consistent. These prompts tell the model which fields to look for in the documents and how to extract them.
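One way to keep the JSON output consistent is to render each party's prompt from a fixed key skeleton and reject replies that do not match it. The sketch below uses hypothetical field lists and a hypothetical model name (`llama3`), and it calls Ollama's REST endpoint (`/api/generate` with `"format": "json"`) directly with the standard library rather than through Haystack's Ollama integration, so it illustrates the idea rather than reproducing the project's pipeline.

```python
import json
import urllib.request
from typing import Any, Dict, List

# Hypothetical field lists; the real prompts target the keys of the I-130 JSON template.
FIELDS: Dict[str, List[str]] = {
    "petitioner": ["family_name", "given_name", "date_of_birth", "country_of_birth"],
    "beneficiary": ["family_name", "given_name", "date_of_birth", "passport_number"],
}

PROMPT_TEMPLATE = """You are extracting data for the {party} section of the I-130 form.
Using only the document text below, return one JSON object with exactly these keys:
{skeleton}
Use null for any value that does not appear in the documents. Do not guess.

Documents:
{context}
"""


def build_prompt(party: str, context: str) -> str:
    """Render the fixed prompt for one party with an all-null JSON skeleton."""
    skeleton = json.dumps({key: None for key in FIELDS[party]}, indent=2)
    return PROMPT_TEMPLATE.format(party=party, skeleton=skeleton, context=context)


def ask_ollama(prompt: str, model: str = "llama3", host: str = "http://localhost:11434") -> str:
    """Send a non-streaming request to a local Ollama server and return the generated text."""
    body = json.dumps({"model": model, "prompt": prompt, "format": "json", "stream": False})
    request = urllib.request.Request(
        f"{host}/api/generate",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]


def parse_reply(party: str, reply: str) -> Dict[str, Any]:
    """Parse the model's reply and require exactly the keys in the party's skeleton."""
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model reply")
    data = json.loads(reply[start : end + 1])  # JSONDecodeError is a ValueError subclass
    if not isinstance(data, dict):
        raise ValueError("model reply is not a JSON object")
    expected = set(FIELDS[party])
    missing, extra = expected - set(data), set(data) - expected
    if missing or extra:
        raise ValueError(f"missing keys {sorted(missing)}, unexpected keys {sorted(extra)}")
    return data
```

A full call would look like `parse_reply("petitioner", ask_ollama(build_prompt("petitioner", ocr_text)))`. Rejecting replies with missing or extra keys gives the later JSON correction step a predictable shape to work with.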

PDF Filling

A key part of the project involved determining the specific information required to complete the I-130 form and identifying which of those details could be extracted from user-provided documents. To support this, a structured JSON template was created based on the layout of the form. This template helped organize the extracted data clearly, making it easy to track which fields were already filled and which were still missing. A sample version of this template is included in Appendix A. Additionally, constraints related to checkboxes and dropdown fields were defined within the template and enforced during the data extraction process to ensure consistent formatting.
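The checkbox and dropdown constraints could be enforced with a small validator like the one below. The template excerpt is hypothetical (Appendix A holds the real template), keys are written in flattened dotted form for brevity, and the dropdown option list is abbreviated for illustration. Values that match an option case-insensitively are rewritten to the canonical spelling, and values that match no option are cleared so they are reported as invalid instead of being written to the form.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical template excerpt in flattened form; Appendix A holds the real template.
TEMPLATE: Dict[str, Dict[str, Any]] = {
    "relationship": {"kind": "checkbox", "options": ["Spouse", "Parent", "Brother/Sister", "Child"]},
    "petitioner.family_name": {"kind": "text"},
    "petitioner.sex": {"kind": "checkbox", "options": ["Male", "Female"]},
    "petitioner.mailing_state": {"kind": "dropdown", "options": ["CA", "NY", "TX"]},  # abbreviated
    "beneficiary.country_of_birth": {"kind": "text"},
}


def enforce_template(values: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, List[str]]]:
    """Normalize constrained fields and sort every template key into filled/missing/invalid."""
    cleaned: Dict[str, Any] = {}
    report: Dict[str, List[str]] = {"filled": [], "missing": [], "invalid": []}
    for key, spec in TEMPLATE.items():
        value = values.get(key)
        if value is None or str(value).strip() == "":
            cleaned[key] = None
            report["missing"].append(key)
            continue
        if "options" in spec:
            wanted = str(value).strip().lower()
            # Accept case/whitespace variants but store the canonical option spelling.
            match = next((opt for opt in spec["options"] if opt.lower() == wanted), None)
            if match is None:
                cleaned[key] = None  # never write an out-of-range value to a checkbox/dropdown
                report["invalid"].append(key)
                continue
            value = match
        cleaned[key] = value
        report["filled"].append(key)
    return cleaned, report
```

The `missing` and `invalid` lists are exactly what the form filler and chatbot need in order to know which fields still require input.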

Each field in the I-130 form was linked to a corresponding key in the structured JSON template, which tells the form-filling script what information each field needs. The pypdf library was chosen for this task because it can fill text, checkbox, and dropdown fields and preserves the form structure when saving the final PDF (pypdf 5.2.0 documentation).

In the first method, a script reads a JSON file that follows the structured template produced during data extraction. The JSON is flattened into a dictionary, and each form field is checked against it. If a matching value is found, the field is populated, whether it is a text box, checkbox, or dropdown; otherwise, the field is skipped and the process continues. Once all fields have been processed, a new PDF is generated with the filled-in values. Because petitioner and beneficiary data are handled separately, the script accepts multiple JSON files for a single form.
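A sketch of the first method follows, assuming pypdf 3.x or later (`PdfWriter(clone_from=...)`, `update_page_form_field_values`). The `field_map` argument, which maps PDF field names to flattened JSON keys, and all helper names are hypothetical. The sketch also assumes checkbox values in the JSON are already stored as the widget's on-state name (for example `/Y`), because pypdf treats values beginning with `/` as PDF names, which is what checkbox states require, and the exact on-state name differs between PDFs.

```python
import json
from typing import Any, Dict, Iterable, List


def flatten(obj: Any, prefix: str = "") -> Dict[str, Any]:
    """Flatten nested dicts and lists into dotted keys, e.g. {"a": {"b": 1}} -> {"a.b": 1}."""
    if isinstance(obj, dict):
        items = [(str(k), v) for k, v in obj.items()]
    elif isinstance(obj, list):
        items = [(str(i), v) for i, v in enumerate(obj)]
    else:
        return {prefix: obj}
    flat: Dict[str, Any] = {}
    for key, value in items:
        flat.update(flatten(value, f"{prefix}.{key}" if prefix else key))
    return flat


def load_values(json_paths: Iterable[str]) -> Dict[str, Any]:
    """Merge several JSON files (e.g. one for the petitioner, one for the beneficiary)."""
    merged: Dict[str, Any] = {}
    for path in json_paths:
        with open(path, encoding="utf-8") as f:
            merged.update(flatten(json.load(f)))
    return merged


def select_values(field_names: Iterable[str], field_map: Dict[str, str], flat: Dict[str, Any]) -> Dict[str, Any]:
    """Keep only form fields whose mapped JSON key has a non-empty value; the rest are skipped."""
    selected: Dict[str, Any] = {}
    for name in field_names:
        value = flat.get(field_map.get(name, name))  # fall back to the field's own name
        if value is None or value == "":
            continue
        # pypdf expects strings (or lists of strings for multi-select), so stringify scalars.
        selected[name] = value if isinstance(value, (str, list)) else str(value)
    return selected


def fill_pdf(template_pdf: str, output_pdf: str, json_paths: List[str], field_map: Dict[str, str]) -> Dict[str, Any]:
    """Fill a fillable PDF from one or more JSON files and write a new PDF."""
    from pypdf import PdfReader, PdfWriter  # lazy import: the helpers above do not need pypdf

    field_names = list((PdfReader(template_pdf).get_fields() or {}).keys())
    values = select_values(field_names, field_map, load_values(json_paths))
    writer = PdfWriter(clone_from=template_pdf)
    for page in writer.pages:
        if "/Annots" in page:  # pages without widgets have nothing to fill
            writer.update_page_form_field_values(page, values)
    writer.write(output_pdf)
    return values
```

Returning the selected values makes it straightforward to compare what was filled against the required fields in the second method.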

The second method addresses any remaining empty fields. The script identifies which required fields are still blank and returns them to the chatbot interface for user input. Once the user provides the missing information, it is passed back in a simple JSON format, and the script uses this data to complete the final version of the form.
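The second method reduces to three small steps: list the required fields that are still blank, turn them into questions for the chatbot, and merge the user's JSON reply. In this sketch the function names, the question wording, and the rule that answers may only fill fields that were actually asked (so a chat reply cannot overwrite extracted values) are assumptions for illustration.

```python
import json
from typing import Any, Dict, Iterable, List


def find_missing(required: Iterable[str], values: Dict[str, Any]) -> List[str]:
    """Return required fields that are still blank after the first filling pass."""
    missing = []
    for name in required:
        value = values.get(name)
        if value is None or str(value).strip() == "":
            missing.append(name)
    return missing


def questions_for_chatbot(missing: List[str], labels: Dict[str, str]) -> List[Dict[str, str]]:
    """Turn blank fields into prompts; `labels` maps field names to human-readable labels."""
    return [{"field": name, "question": f"Please provide: {labels.get(name, name)}"} for name in missing]


def apply_answers(values: Dict[str, Any], answers_json: str, asked: Iterable[str]) -> Dict[str, Any]:
    """Merge the chatbot's JSON reply, accepting only non-blank answers to fields that were asked."""
    answers = json.loads(answers_json)
    if not isinstance(answers, dict):
        raise ValueError("expected a JSON object mapping field names to values")
    allowed = set(asked)
    merged = dict(values)
    for name, value in answers.items():
        if name in allowed and value is not None and str(value).strip():
            merged[name] = value
    return merged
```

The merged dictionary would then be passed to the filling step again to produce the final version of the form.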

Result

Challenges & Takeaways

One of the main challenges in this project was managing model hallucinations during data extraction, particularly when using large language models to interpret partially structured or ambiguous inputs. Ensuring that the extracted data aligned accurately with the I-130 form’s requirements required prompt refinement and strict validation logic to catch and correct inconsistencies. Another key challenge was handling sensitive personally identifiable information (PII). To maintain privacy and ensure data security, a smaller, locally hosted language model was used instead of relying on external APIs or cloud-hosted solutions. This approach reduced risk while still allowing for effective document understanding and user interaction. A major takeaway from the project was the importance of combining strong model performance with robust data control, balancing accuracy, security, and usability in real-world AI systems.
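The write-up does not detail its validation rules, so the following is only one possible hallucination check, not the project's method: flag any extracted value that cannot be found in the OCR text of the source documents, ignoring case, spacing, and punctuation. The function name and the `skip` parameter are hypothetical. Fields whose values are legitimately reformatted (such as normalized dates) must be skipped or checked another way, and very short values (such as single letters) match almost anything, so a check like this complements schema validation rather than replacing it.

```python
import re
from typing import Any, Dict, Iterable, List


def _normalize(text: str) -> str:
    """Casefold and drop everything except letters and digits."""
    return re.sub(r"[\W_]+", "", text.casefold())


def ungrounded_fields(values: Dict[str, Any], source_text: str, skip: Iterable[str] = ()) -> List[str]:
    """Return keys whose extracted value does not occur anywhere in the source text."""
    corpus = _normalize(source_text)
    skipped = set(skip)
    flagged = []
    for key, value in values.items():
        if key in skipped or value is None:
            continue
        needle = _normalize(str(value))
        if needle and needle not in corpus:
            flagged.append(key)  # candidate hallucination: route to review or the chatbot
    return flagged
```

Flagged fields can be cleared and sent to the chatbot like any other missing field, which keeps unverified model output off the final form.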